@PersistenceCapable

public class Hotel

{

...

}

JDO Mapping Guide (v5.2)

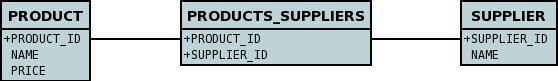

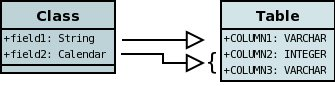

To implement a persistence layer with JDO you first need to map the classes and fields/properties that are involved in the persistence process to how they are represented in the datastore.

This can be as simple as marking the classes as @PersistenceCapable and defaulting the datastore definition, or you can configure down to the fine detail of precisely which schema it maps onto.

The following sections deal with the many options available for using metadata to map your persistable classes.

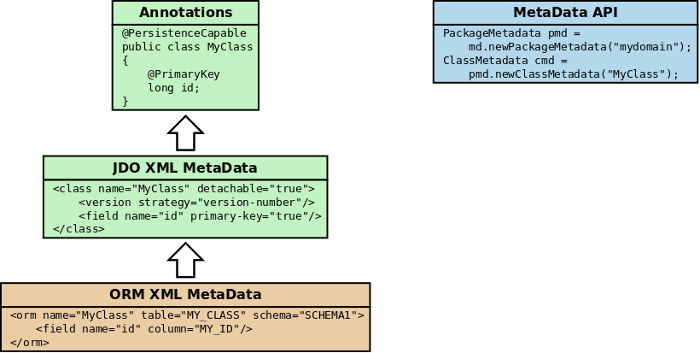

When mapping a class for JDO you make use of metadata. This metadata can be Java annotations, XML metadata, or a mixture of both, or you can even define it using a dynamic API. This is very much down to personal preference, but we try to present both ways here.

| We advise trying to keep schema information out of annotations, so that you avoid tying compiled code to a specific datastore. That way you retain datastore-independence. This may not be a concern for your project however. |

| Whilst the JDO spec provides for you to specify your mapping information using JDO metadata (JDO annotations, or JDO/ORM XML Metadata, or via the Metadata API), it also allows you the option of using JPA metadata (JPA annotations, orm.xml). This is provided as a way of easily migrating across to JDO from JPA, for example. Consult the DataNucleus JPA mappings docs for details. |

In terms of the relative priority of annotations, JDO XML and ORM XML metadata, the following figure highlights the process

So you can provide the metadata via JDO annotations solely, or via JDO annotations plus ORM XML Metadata overrides, or via JDO XML Metadata solely, or via JDO XML Metadata plus ORM XML Metadata overrides, or finally via a Metadata API.

If you are using XML overrides for ORM, this definition will be merged in to the base definition (JDO annotations or JDO XML Metadata). Note that you can utilise JDO annotations for one class, and then JDO XML Metadata for another class should you so wish.

One further alternative is if you have annotations in your classes, you provide JDO XML Metadata (package.jdo), and also ORM XML Metadata (package-{mapping}.orm). In this case the annotations are the base representation, applying overrides from JDO XML Metadata, and then overrides from the ORM XML Metadata.

| When not using the MetaData API we recommend that you use either XML or annotations for the basic persistence information, but always use XML for schema information. This is because it is liable to change at deployment time and hence is accessible when in XML form whereas in annotations you add an extra compile cycle (and also you may need to deploy to some other datastore at some point, hence needing a different deployment). |

Classes

We have the following types of classes in DataNucleus JDO.

- PersistenceCapable - persistable class with full control over its persistence.

- PersistenceAware - a class that is not itself persisted, but that needs to access internals of persistable classes.

JDO imposes very little on classes used within the persistence process so, to a very large degree, you should design your classes as you would normally do and not design them to fit JDO.

| In strict JDO all persistable classes need to have a default constructor. With DataNucleus JDO this is not necessary, since all classes are enhanced before persistence and the enhancer adds on a default constructor if one is not defined. |

| If defining a method toString in a JDO persistable class, be aware that use of a persistable field will cause the load of that field if the object is managed and is not yet loaded. |

| If a JDO persistable class is an element of a Java collection in another entity, you are advised to define hashCode and equals methods for reliable handling by Java collections. |
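As a minimal sketch of the last point, assume a hypothetical Hotel class whose identity is carried by a single id field. The field names, and the choice to compare on id alone, are illustrative assumptions rather than something this guide prescribes. JDO annotations are left out so that the snippet compiles on its own.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical persistable class; in a real project it would also carry @PersistenceCapable.
class Hotel {
    private long id;       // assumed primary-key field
    private String name;

    Hotel(long id, String name) {
        this.id = id;
        this.name = name;
    }

    @Override
    public boolean equals(Object other) {
        if (this == other) return true;
        if (!(other instanceof Hotel)) return false;
        return id == ((Hotel) other).id;   // equality is based on the key only
    }

    @Override
    public int hashCode() {
        return Long.hashCode(id);          // kept consistent with equals
    }
}

class HotelSetDemo {
    public static void main(String[] args) {
        Set<Hotel> hotels = new HashSet<>();
        hotels.add(new Hotel(1L, "Grand"));
        hotels.add(new Hotel(1L, "Grand (renamed)")); // same id, so treated as a duplicate
        System.out.println(hotels.size());            // prints 1
    }
}
```

Basing both methods on the key field means a collection treats two references to the same record as one element, whichever non-key fields happen to be loaded.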

Persistence Capable Classes

The first thing to decide when implementing your persistence layer is which classes are to be persisted. Let’s take a sample class (Hotel) as an example. We can define a class as persistable using either annotations in the class, or XML metadata. With annotations, we mark the class as @PersistenceCapable

or using XML metadata

<class name="Hotel">

...

</class>

| If any of your other classes access the fields of these persistable classes directly then these other classes should be defined as PersistenceAware. |

Persistence-Aware Classes

If a class is not itself persistable but it interacts with fields of persistable classes then it should be marked as Persistence Aware. You do this as follows

@PersistenceAware

public class MyClass

{

...

}

or using XML metadata

<class name="MyClass" persistence-modifier="persistence-aware">

...

</class>

Read-Only Classes

You can, if you wish, make a class "read-only". This is a DataNucleus extension and you set it as follows

import org.datanucleus.api.jdo.annotations.ReadOnly;

@PersistenceCapable

@ReadOnly

public class MyClass

{

...

}

or using XML Metadata

<class name="MyClass">

...

<extension vendor-name="datanucleus" key="read-only" value="true"/>

</class>

In practical terms this means that at runtime, if you try to persist an object of this type then an exception will be thrown. You can read objects of this type from the datastore just as you would for any persistable class.


Detachable Classes

One of the main things you need to decide for your persistable classes is whether you will be detaching them from the persistence process for use in a different layer of your application. If you do want to do this then you need to mark them as detachable, like this

@PersistenceCapable(detachable="true")

public class Hotel

{

...

}

or using XML metadata

<class name="Hotel" detachable="true">

...

</class>

SoftDelete

| Applicable to RDBMS, MongoDB, HBase, Cassandra, Neo4j |

With standard JDO when you delete an object from persistence it is deleted from the datastore. DataNucleus provides a useful ability to soft delete objects from persistence. In simple terms, any persistable types marked for soft deletion handling will have an extra column added to their datastore table to represent whether the record is soft-deleted. If it is soft deleted then it will not be visible at runtime thereafter, but will be present in the datastore.

You mark a persistable type for soft deletion handling like this

import org.datanucleus.api.jdo.annotations.SoftDelete;

@PersistenceCapable

@SoftDelete

public class Hotel

{

...

}

You could optionally specify the column attribute of the @SoftDelete annotation to define the column name where this flag is stored.

If you instead wanted to define this in XML then do it like this

<class name="Hotel">

<extension vendor-name="datanucleus" key="softdelete" value="true"/>

<extension vendor-name="datanucleus" key="softdelete-column-name" value="DELETE_FLAG"/>

...

</class>

Whenever any objects of type Hotel are deleted, like this

pm.deletePersistent(myHotel);

the myHotel object will be updated to set the soft-delete flag to true.

Any call to pm.getObjectById or query will not return the object since it is effectively deleted (though still present in the datastore).

If you want to view the object, you can specify the query extension datanucleus.query.includeSoftDeletes as true and the soft-deleted records will be visible.

This feature is still undergoing development, so not all aspects are feature complete.
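To make the behaviour concrete, here is a small in-memory analogy of soft deletion. It is not DataNucleus code: SoftDeleteTable, its methods and the includeSoftDeletes parameter are hypothetical names that only mirror the semantics described above (a delete sets a flag, normal lookups skip flagged rows, and an opt-in lookup still sees them).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical in-memory stand-in for a table with a soft-delete flag column.
class SoftDeleteTable {
    private static final class Row {
        final String name;
        boolean deleteFlag;            // plays the role of the DELETE_FLAG column

        Row(String name) {
            this.name = name;
        }
    }

    private final Map<Long, Row> rows = new HashMap<>();

    void insert(long id, String name) {
        rows.put(id, new Row(name));
    }

    // "Deleting" only flips the flag; the row stays in the table.
    void delete(long id) {
        Row row = rows.get(id);
        if (row != null) {
            row.deleteFlag = true;
        }
    }

    // Normal lookups hide flagged rows unless the caller opts in.
    Optional<String> find(long id, boolean includeSoftDeletes) {
        Row row = rows.get(id);
        if (row == null || (row.deleteFlag && !includeSoftDeletes)) {
            return Optional.empty();
        }
        return Optional.of(row.name);
    }

    // Number of rows physically present, flagged or not.
    int physicalRowCount() {
        return rows.size();
    }
}
```

After delete(1L), find(1L, false) is empty while find(1L, true) still returns the name, which is the same distinction the datanucleus.query.includeSoftDeletes extension draws for queries.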


Inheritance

In Java it is a normal situation to have inheritance between classes. With JDO you have choices to make as to how you want to persist your classes for the inheritance tree. For each class you select how you want to persist that class's information. You have the following choices.

- The first and simplest to understand option is where each class has its own table in the datastore. In JDO this is referred to as new-table.

- The second way is to select a class to have its fields persisted in the table of its subclass. In JDO this is referred to as subclass-table.

- The third way is to select a class to have its fields persisted in the table of its superclass. In JDO this is known as superclass-table.

- The final way is for all classes in an inheritance tree to have their own table containing all fields. This is known as complete-table and is enabled by setting the inheritance strategy of the root class to use this.

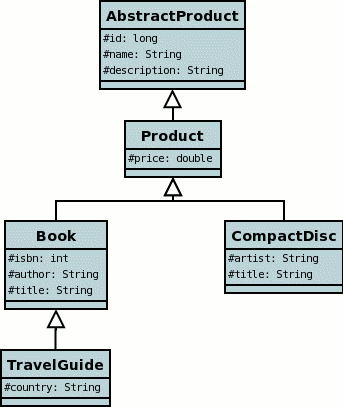

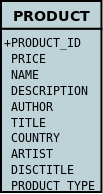

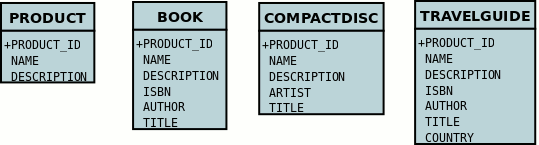

In order to demonstrate the various inheritance strategies we need an example. Here are a few simple classes representing products in an (online) store. We have an abstract base class, extending this to provide something that we can represent any product by. We then provide a few specialisations for typical products. We will use these classes later when defining how to persist these objects in the different inheritance strategies.

JDO imposes a "default" inheritance strategy if none is specified for a class. If the class is a base class and no inheritance strategy is specified then it will be set to new-table for that class. If the class has a superclass and no inheritance strategy is specified then it will be set to superclass-table. This means that, when no strategy is set for the classes in an inheritance tree, they will default to using a single table managed by the base class.

You can control the "default" strategy chosen by way of the persistence property datanucleus.defaultInheritanceStrategy. The default is JDO2 which will give the above default behaviour for all classes that have no strategy specified. The other option is TABLE_PER_CLASS which will use "new-table" for all classes which have no strategy specified.
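The defaulting rules above can be summarised in a few lines of code. This is only a sketch of the decision as the text describes it, not DataNucleus internals; DefaultInheritance, Strategy, DefaultMode and resolve are hypothetical names introduced for the illustration.

```java
// Hypothetical helper mirroring the defaulting rules described in the text.
class DefaultInheritance {
    enum Strategy { NEW_TABLE, SUPERCLASS_TABLE }

    // The two values of datanucleus.defaultInheritanceStrategy named in the text.
    enum DefaultMode { JDO2, TABLE_PER_CLASS }

    // Strategy applied to a class that specifies no inheritance strategy of its own.
    static Strategy resolve(boolean hasPersistableSuperclass, DefaultMode mode) {
        if (mode == DefaultMode.TABLE_PER_CLASS) {
            return Strategy.NEW_TABLE;               // every class gets its own table
        }
        // JDO2 mode: base classes get a table, subclasses share the superclass table.
        return hasPersistableSuperclass ? Strategy.SUPERCLASS_TABLE : Strategy.NEW_TABLE;
    }
}
```

With the JDO2 setting, a tree in which no class names a strategy therefore collapses into the single table of the base class.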

| At runtime, when you start up your PersistenceManagerFactory, JDO will only know about the classes that the persistence API has been introduced to via method calls. To alleviate this, particularly for subclasses of classes in an inheritance relationship, you should make use of one of the many available Auto Start Mechanisms |

| You must specify the identity of objects in the root persistable class of the inheritance hierarchy. You cannot redefine it down the inheritance tree |


Discriminator

| Applicable to RDBMS, HBase, MongoDB |

A discriminator is an extra "column" stored alongside data to identify the class of which that information is part. It is useful when storing objects which have inheritance to provide a quick way of determining the object type on retrieval. There are two types of discriminator supported by JDO

- class-name : where the actual name of the class is stored as the discriminator

- value-map : where a (typically numeric) value is stored for each class in question, allowing simple look-up of the class it equates to

You specify a discriminator as follows

<class name="Product">

<inheritance>

<discriminator strategy="class-name"/>

</inheritance>

...

</class>

or with annotations

@PersistenceCapable

@Discriminator(strategy=DiscriminatorStrategy.CLASS_NAME)

public class Product {...}

Alternatively if using value-map strategy then you need to provide the value map for all classes in the inheritance tree that will be persisted in their own right.

@PersistenceCapable

@Discriminator(strategy=DiscriminatorStrategy.VALUE_MAP, value="PRODUCT")

public class Product {...}

@PersistenceCapable

@Discriminator(value="BOOK")

public class Book {...}

...

New Table

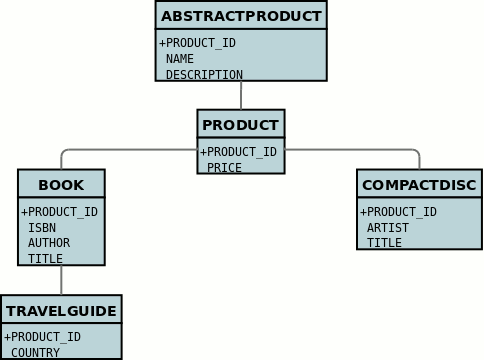

| Applicable to RDBMS |

Here we want to have a separate table for each class. This has the advantage of being the most normalised data definition. It also has the disadvantage of being slower in performance since multiple tables will need to be accessed to retrieve an object of a sub type. Let’s try an example using the simplest to understand strategy new-table. We have the classes defined above, and we want to persist our classes each in their own table. We define the Meta-Data for our classes like this

<class name="AbstractProduct">

<inheritance strategy="new-table"/>

<field name="id" primary-key="true">

<column name="PRODUCT_ID"/>

</field>

...

</class>

<class name="Product">

<inheritance strategy="new-table"/>

...

</class>

<class name="Book">

<inheritance strategy="new-table"/>

...

</class>

<class name="TravelGuide">

<inheritance strategy="new-table"/>

...

</class>

<class name="CompactDisc">

<inheritance strategy="new-table"/>

...

</class>

or with annotations

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.NEW_TABLE)

public abstract class AbstractProduct {...}

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.NEW_TABLE)

public class Product {...}

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.NEW_TABLE)

public class Book {...}

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.NEW_TABLE)

public class TravelGuide {...}

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.NEW_TABLE)

public class CompactDisc {...}

We use the inheritance element to define the persistence of the inherited classes.

In the datastore, each class in an inheritance tree is represented in its own datastore table (tables ABSTRACTPRODUCT, PRODUCT, BOOK, TRAVELGUIDE, and COMPACTDISC), with each subclass table having a foreign key from its primary key to the primary key of its superclass table.

In the above example, when we insert a TravelGuide object into the datastore, a row will be inserted into ABSTRACTPRODUCT, PRODUCT, BOOK, and TRAVELGUIDE.

Subclass table

| Applicable to RDBMS |

DataNucleus supports persistence of classes in the tables of subclasses where this is required. This is typically used where you have an abstract base class and it doesn’t make sense having a separate table for that class. In our example we have no real interest in having a separate table for the AbstractProduct class. So in this case we change one thing in the Meta-Data quoted above. We now change the definition of AbstractProduct as follows

<class name="AbstractProduct">

<inheritance strategy="subclass-table"/>

<field name="id" primary-key="true">

<column name="PRODUCT_ID"/>

</field>

...

</class>

or with annotations

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.SUBCLASS_TABLE)

public abstract class AbstractProduct {...}

This subtle change in the use of the inheritance element has the effect of using the PRODUCT table for both the Product and AbstractProduct classes, containing the fields of both classes.

In the above example, when we insert a TravelGuide object into the datastore, a row will be inserted into PRODUCT, BOOK, and TRAVELGUIDE.

| DataNucleus doesn’t currently fully support the use of classes defined with subclass-table strategy as having relationships where there are more than a single subclass that has a table. If the class has a single subclass with its own table then there should be no problem. |

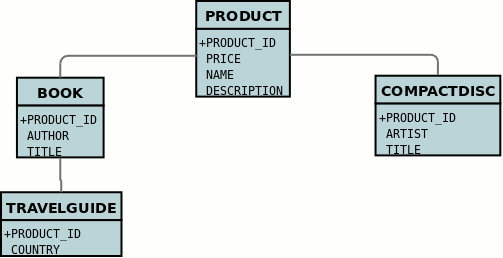

Superclass table

| Applicable to RDBMS |

DataNucleus supports persistence of classes in the tables of superclasses where this is required.

This has the advantage that retrieval of an object is a single SQL call to a single table.

It also has the disadvantages that the single table can have a very large number of columns, so database readability and performance can suffer, and that a discriminator column is required.

In our example, let’s ignore the AbstractProduct class for a moment and assume that Product is the base class.

We have no real interest in having separate tables for the Book and CompactDisc classes and want everything stored in a single table PRODUCT.

We change our MetaData as follows

<class name="Product">

<inheritance strategy="new-table">

<discriminator strategy="class-name">

<column name="PRODUCT_TYPE"/>

</discriminator>

</inheritance>

<field name="id" primary-key="true">

<column name="PRODUCT_ID"/>

</field>

...

</class>

<class name="Book">

<inheritance strategy="superclass-table"/>

...

</class>

<class name="TravelGuide">

<inheritance strategy="superclass-table"/>

...

</class>

<class name="CompactDisc">

<inheritance strategy="superclass-table"/>

...

</class>

or with annotations

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.NEW_TABLE)

@Discriminator(strategy=DiscriminatorStrategy.CLASS_NAME, column="PRODUCT_TYPE")

public class Product {...}

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.SUPERCLASS_TABLE)

public class Book {...}

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.SUPERCLASS_TABLE)

public class TravelGuide {...}

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.SUPERCLASS_TABLE)

public class CompactDisc {...}

This change of use of the inheritance element has the effect of using the PRODUCT table for all classes, containing the fields of Product, Book, CompactDisc, and TravelGuide.

You will also note that we used a discriminator element for the Product class.

The specification above will result in an extra column (called PRODUCT_TYPE) being added to the PRODUCT table, and containing the class name of the object stored.

So for a Book it will have "com.mydomain.samples.store.Book" in that column. This column is used in discriminating which row in the database is of which type.

The final thing to note is that in our classes Book and CompactDisc we have a field that is identically named.

With CompactDisc we have defined that its column will be called DISCTITLE since both of these fields will be persisted into the same table and would have had identical names otherwise - this gets around the problem.

In the above example, when we insert a TravelGuide object into the datastore, a row will be inserted into the PRODUCT table only.
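The three statements about which tables receive a row when a TravelGuide is inserted (under new-table, subclass-table and superclass-table) can be reproduced with a small model. This is a hypothetical sketch, not DataNucleus API: InheritanceTables, Mapped, tableOf and tablesForInsert are names invented here, and it assumes the default table name is simply the upper-cased class name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical model (not DataNucleus API) of which tables receive a row on insert.
class InheritanceTables {
    enum Strategy { NEW_TABLE, SUBCLASS_TABLE, SUPERCLASS_TABLE }

    // One entry per class on the path from the root class down to the inserted type.
    static final class Mapped {
        final String className;
        final Strategy strategy;

        Mapped(String className, Strategy strategy) {
            this.className = className;
            this.strategy = strategy;
        }
    }

    // Table holding the fields of path.get(i); assumes table name = upper-cased class name.
    static String tableOf(List<Mapped> path, int i) {
        Mapped m = path.get(i);
        if (m.strategy == Strategy.NEW_TABLE) {
            return m.className.toUpperCase(Locale.ROOT);
        }
        // superclass-table looks up the tree, subclass-table looks down it,
        // until a class with its own table is found.
        int step = (m.strategy == Strategy.SUPERCLASS_TABLE) ? -1 : 1;
        for (int j = i + step; j >= 0 && j < path.size(); j += step) {
            if (path.get(j).strategy == Strategy.NEW_TABLE) {
                return path.get(j).className.toUpperCase(Locale.ROOT);
            }
        }
        throw new IllegalStateException("No table found for " + m.className);
    }

    // Distinct tables, root first, that get a row when the last class in the path is inserted.
    static List<String> tablesForInsert(List<Mapped> path) {
        List<String> tables = new ArrayList<>();
        for (int i = 0; i < path.size(); i++) {
            String table = tableOf(path, i);
            if (!tables.contains(table)) {
                tables.add(table);
            }
        }
        return tables;
    }
}
```

Feeding it the path AbstractProduct, Product, Book, TravelGuide with new-table throughout yields all four tables; switching AbstractProduct to subclass-table drops ABSTRACTPRODUCT; and the path Product (new-table), Book and TravelGuide (superclass-table) yields PRODUCT alone.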

JDO allows two types of discriminators. The example above used a discriminator strategy of class-name. This inserts the class name into the discriminator column so that we know what the class of the object really is. The second option is to use a discriminator strategy of value-map. With this we will define a "value" to be stored in this column for each of our classes. The only thing here is that we have to define the "value" in the MetaData for ALL classes that use that strategy. So to give the equivalent example :-

<class name="Product">

<inheritance strategy="new-table">

<discriminator strategy="value-map" value="PRODUCT">

<column name="PRODUCT_TYPE"/>

</discriminator>

</inheritance>

<field name="id" primary-key="true">

<column name="PRODUCT_ID"/>

</field>

...

</class>

<class name="Book">

<inheritance strategy="superclass-table">

<discriminator value="BOOK"/>

</inheritance>

...

</class>

<class name="TravelGuide">

<inheritance strategy="superclass-table">

<discriminator value="TRAVELGUIDE"/>

</inheritance>

...

</class>

<class name="CompactDisc">

<inheritance strategy="superclass-table">

<discriminator value="COMPACTDISC"/>

</inheritance>

...

</class>

As you can see from the MetaData DTD it is possible to specify the column details for the discriminator. DataNucleus supports this, but only currently supports the following values of jdbc-type : VARCHAR, CHAR, INTEGER, BIGINT, NUMERIC. The default column type will be a VARCHAR.
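To illustrate why the value-map strategy needs a value defined for every class that shares the table, here is a hypothetical sketch of the lookup it implies. DiscriminatorValueMap and its methods are invented for this example and say nothing about how DataNucleus implements the lookup internally.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of a value-map discriminator lookup (not DataNucleus internals).
class DiscriminatorValueMap {
    private final Map<String, String> classNameByValue = new HashMap<>();

    // Every class stored in the shared table registers its own discriminator value.
    void register(String discriminatorValue, String className) {
        String previous = classNameByValue.putIfAbsent(discriminatorValue, className);
        if (previous != null) {
            throw new IllegalArgumentException("Duplicate discriminator value: " + discriminatorValue);
        }
    }

    // Resolves the class for the value read from the PRODUCT_TYPE column of a row.
    String classFor(String discriminatorValue) {
        String className = classNameByValue.get(discriminatorValue);
        if (className == null) {
            throw new IllegalArgumentException("Unmapped discriminator value: " + discriminatorValue);
        }
        return className;
    }
}
```

A row whose PRODUCT_TYPE holds a value that no class declared cannot be resolved to a type, which is why the metadata must supply a value for all classes using the strategy.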

Complete table

| Applicable to RDBMS, Neo4j, NeoDatis, Excel, OOXML, ODF, HBase, JSON, AmazonS3, GoogleStorage, MongoDB, LDAP |

With "complete-table" we define the strategy on the root class of the inheritance tree and it applies to all subclasses. Each class is persisted into its own table, having columns for all fields (of the class in question plus all fields of superclasses). So taking the same classes as used above

<class name="Product">

<inheritance strategy="complete-table"/>

<field name="id" primary-key="true">

<column name="PRODUCT_ID"/>

</field>

...

</class>

<class name="Book">

...

</class>

<class name="TravelGuide">

...

</class>

<class name="CompactDisc">

...

</class>

or with annotations

@PersistenceCapable

@Inheritance(strategy=InheritanceStrategy.COMPLETE_TABLE)

public abstract class AbstractProduct {...}

So the key thing is the specification of inheritance strategy at the root only. This then implies a datastore schema as follows

So any object of explicit type Book is persisted into the table BOOK.

Similarly any TravelGuide is persisted into the table TRAVELGUIDE.

In addition if any class in the inheritance tree is abstract then it won’t have a table since there cannot be any instances of that type.

DataNucleus currently has limitations when using a class using this inheritance as the element of a collection.

Retrieval of inherited objects

JDO provides particular mechanisms for retrieving inheritance trees. These are accessed via the Extent/Query interface. Taking our example above, we can then do

tx.begin();

Extent e = pm.getExtent(com.mydomain.samples.store.Product.class, true);

Query q = pm.newQuery(e);

Collection c=(Collection)q.execute();

tx.commit();

The second parameter passed to pm.getExtent relates to whether to return subclasses. So if we pass in the root of the inheritance tree (Product in our case) we get all objects in this inheritance tree returned. You can, of course, use far more elaborate queries using JDOQL, but this is just to highlight the method of retrieval of subclasses.

Identity

All JDO-enabled persistable classes need to have an "identity" to be able to identify an object for retrieval and relationships. There are three types of identity definable using JDO. These are

- Nondurable Identity : the persistable type has no identity as such, so the only way to lookup objects of this type would be via query for values of specific fields. This is useful for storing things like log messages etc.

- Datastore Identity : a surrogate column is added to the persistence of the persistable type, and objects of this type are identified by the class plus the value in this surrogate column.

- Application Identity : a field, or several fields of the persistable type are assigned as being (part of) the primary key. A further complication is where you use application identity but one of the fields forming the primary key is a relation field. This is known as Compound Identity.

| When you have an inheritance hierarchy, you should specify the identity type in the base instantiable class for the inheritance tree. This is then used for all persistent classes in the tree. This means that you can have superclass(es) without any identity defined but using subclass-table inheritance, and then the base instantiable class is the first persistable class which has the identity. |

| The JDO identity is not the same as the type of the field(s) marked as the primary key. The identity will always have an identity class name. If you specify the object-id class then it will be this, otherwise will use a built-in type. |

Nondurable Identity

| Applicable to RDBMS, ODF, Excel, OOXML, HBase, Neo4j, MongoDB. |

With nondurable identity your objects will not have a unique identity in the datastore. This type of identity is typically for log files, history files etc where you aren’t going to access the object by key, but instead by a different parameter. In the datastore the table will typically not have a primary key. To specify that a class is to use nondurable identity with JDO you would define metadata like this

@PersistenceCapable(identityType=IdentityType.NONDURABLE)

public class MyClass

{

...

}

or using XML metadata

<class name="MyClass" identity-type="nondurable">

...

</class>

What this means for something like RDBMS is that the table (or view) of the class will not have a primary-key.

Datastore Identity

| Applicable to RDBMS, ODF, Excel, OOXML, HBase, Neo4j, MongoDB, XML, Cassandra, JSON |

With datastore identity you are leaving the assignment of ids to DataNucleus and your class will not have a field for this identity - it will be added to the datastore representation by DataNucleus. It is, to all intents and purposes, a surrogate key that will have its own column in the datastore. To specify that a class is to use datastore identity with JDO, you do it like this

@PersistenceCapable(identityType=IdentityType.DATASTORE)

public class MyClass

{

...

}

or using XML metadata

<class name="MyClass" identity-type="datastore">

...

</class>

So you are specifying the identity-type as datastore. You don’t need to add this because datastore is the default, so in the absence of any value, it will be assumed to be 'datastore'.

Datastore Identity : Generating identities

By choosing datastore identity you are handing the process of identity generation to the JDO implementation. This does not mean that you haven’t got any control over how it does this. JDO defines many ways of generating these identities and DataNucleus supports all of these and provides some more of its own besides.

Defining which one to use is a simple matter of specifying its metadata, like this

@PersistenceCapable

@DatastoreIdentity(strategy="sequence", sequence="MY_SEQUENCE")

public class MyClass

{

...

}

or using XML metadata

<class name="MyClass" identity-type="datastore">

<datastore-identity strategy="sequence" sequence="MY_SEQUENCE"/>

...

</class>

Some of the datastore identity strategies require additional attributes, but the specification is straightforward.

See also :-

- Value Generation - strategies for generating ids

Datastore Identity : Accessing the Identity

When using datastore identity, the class has no associated field so you can’t just access a field of the class to see its identity. If you need a field to be able to access the identity then you should be using application identity. There are, however, ways to get the identity for the datastore identity case, if you have the object.

// Via the PersistenceManager

Object id = pm.getObjectId(obj);

// Via JDOHelper

Object id = JDOHelper.getObjectId(obj);

You should be aware however that the "identity" is in a complicated form, and is not available as a simple integer value for example. Again, if you want an identity of that form then you should use application identity.

Datastore Identity : Implementation

When implementing datastore identity all JDO implementations have to provide a public class that represents this identity. If you call pm.getObjectId(…) for a class using datastore identity you will be passed an object which, in the case of DataNucleus will be of type org.datanucleus.identity.OIDImpl. If you were to call "toString()" on this object you would get something like

1[OID]mydomain.MyClass

This is made up of :-

- 1 = identity number of this object

- mydomain.MyClass = class-name

| The definition of this datastore identity is JDO implementation dependent. As a result you should not use the org.datanucleus.identity.OID class in your application if you want to remain implementation independent. |

DataNucleus allows you the luxury of being able to provide your own datastore identity class so you can have whatever formatting you want for identities. You can then set the persistence property datanucleus.datastoreIdentityType to a value such as kodo or xcalia, which would replicate the types of datastore identity generated by the former JDO implementations Solarmetric Kodo and Xcalia respectively.

Datastore Identity : Accessing objects by Identity

If you have the JDO identity then you can access the object with that identity like this

Object obj = pm.getObjectById(id);

You can also access the object from the object class name and the toString() form of the datastore identity (e.g. "1[OID]mydomain.MyClass") like this

Object obj = pm.getObjectById(MyClass.class, mykey);

Application Identity

| Applicable to all datastores. |

With application identity you take control of specifying the ids yourself, rather than leaving this to DataNucleus. Application identity requires a primary key class (unless you have a single primary-key field, in which case the PK class is provided for you). Each persistence capable class may define a different class for its primary key, and different persistence capable classes can use the same primary key class, as appropriate. With application identity the field(s) of the primary key will be present as field(s) of the class itself. To specify that a class is to use application identity, you add the following to the MetaData for the class.

<class name="MyClass" objectid-class="MyIdClass">

<field name="myPrimaryKeyField" primary-key="true"/>

...

</class>

For JDO we specify the primary-key and objectid-class. The objectid-class is optional, and is the class defining the identity for this class (again, if you have a single primary-key field then you can omit it). Alternatively, if we are using annotations

@PersistenceCapable(objectIdClass=MyIdClass.class)

public class MyClass

{

@Persistent(primaryKey="true")

private long myPrimaryKeyField;

}

Application Identity : PrimaryKey Classes

When you choose application identity you are defining which fields of the class are part of the primary key, and you take control of specifying the ids yourself rather than leaving this to DataNucleus. Application identity requires a primary key (PK) class. Each persistence capable class may define a different class for its primary key, and different persistence capable classes can use the same primary key class, as appropriate. If you have only a single primary-key field then there are built-in PK classes so you can forget this section.

| If you are thinking of using multiple primary key fields in a class we would urge you to consider using a single (maybe surrogate) primary key field instead for reasons of simplicity and performance. This also means that you can avoid the need to define your own primary key class. |

Where you have more than 1 primary key field, you would map the persistable class like this

<class name="MyClass" identity-type="application" objectid-class="MyIdClass">

...

</class>

or using annotations

@PersistenceCapable(objectIdClass=MyIdClass.class)

public class MyClass

{

...

}

You now need to define the PK class to use (MyIdClass). This is simplified for you because if you have only one PK field then you don’t need to define a PK class and you only define it when you have a composite PK.

An important thing to note is that the PK can only be made up of fields of the following Java types

- Primitives : boolean, byte, char, int, long, short

- java.lang : Boolean, Byte, Character, Integer, Long, Short, String, Enum, StringBuffer

- java.math : BigInteger

- java.sql : Date, Time, Timestamp

- java.util : Date, Currency, Locale, TimeZone, UUID

- java.net : URI, URL

- Persistable

Note that some of these are JDO standard types, while the others are DataNucleus extensions. As always, check the specific datastore docs to see what is supported for your datastore.

Single PrimaryKey field

The simplest way of using application identity is where you have a single PK field, and in this case you use the SingleFieldIdentity mechanism.

This provides a PrimaryKey and you don’t need to specify the objectid-class. Let’s take an example

public class MyClass

{

long id;

...

}

<class name="MyClass" identity-type="application">

<field name="id" primary-key="true"/>

...

</class>

or using annotations

@PersistenceCapable

public class MyClass

{

@PrimaryKey

long id;

...

}

So we didn’t specify the JDO "objectid-class". You will, of course, have to give the field a value before persisting the object, either by setting it yourself, or by using a value-strategy on that field.

If you need to create an identity of this form for use in querying via pm.getObjectById() then you can create the identities in the following way

// For a "long" type :

javax.jdo.identity.LongIdentity id = new javax.jdo.identity.LongIdentity(myClass, 101);

// For a "String" type :

javax.jdo.identity.StringIdentity id = new javax.jdo.identity.StringIdentity(myClass, "ABCD");

We have shown an example above for type "long", but you can also use this for the following

- short, Short - javax.jdo.identity.ShortIdentity

- int, Integer - javax.jdo.identity.IntIdentity

- long, Long - javax.jdo.identity.LongIdentity

- String - javax.jdo.identity.StringIdentity

- char, Character - javax.jdo.identity.CharIdentity

- byte, Byte - javax.jdo.identity.ByteIdentity

- java.util.Date - javax.jdo.identity.ObjectIdentity

- java.util.Currency - javax.jdo.identity.ObjectIdentity

- java.util.Locale - javax.jdo.identity.ObjectIdentity

| It is however better not to make explicit use of these JDO classes and instead to just use the pm.getObjectById taking in the class and the value and then you have no dependency on these classes. |

PrimaryKey : Rules for User-Defined classes

If you wish to use application identity and don’t want to use the "SingleFieldIdentity" builtin PK classes then you must define a Primary Key class of your own. You can’t use classes like java.lang.String, or java.lang.Long directly. You must follow these rules when defining your primary key class.

- the Primary Key class must be public

- the Primary Key class must implement Serializable

- the Primary Key class must have a public no-arg constructor, which might be the default constructor

- the field types of all non-static fields in the Primary Key class must be serializable, and are recommended to be primitive, String, Date, or Number types

- all serializable non-static fields in the Primary Key class must be public

- the names of the non-static fields in the Primary Key class must include the names of the primary key fields in the JDO class, and the types of the common fields must be identical

- the equals() and hashCode() methods of the Primary Key class must use the value(s) of all the fields corresponding to the primary key fields in the JDO class

- if the Primary Key class is an inner class, it must be static

- the Primary Key class must override the toString() method defined in Object, and return a String that can be used as the parameter of a constructor

- the Primary Key class must provide a String constructor that returns an instance that compares equal to the instance whose toString() method returned that String

- the Primary Key class must only be used within a single inheritance tree.

Please note that if one of the fields that comprises the primary key is in itself a persistable object then you have Compound Identity and should consult the documentation for that feature which contains its own example.

| Since there are many possible combinations of primary-key fields it is impossible for JDO to provide a series of builtin composite primary key classes. However the DataNucleus enhancer provides a mechanism for auto-generating a primary-key class for a persistable class. It follows the rules listed above and should work for all cases. If you want to tailor the output of things like the PK toString() method then you ought to define your own. The enhancer generation of a primary-key class is only enabled if you don’t define your own class. |

| Your "id" class can store the target class name of the persistable object that it represents. This is useful where you want to avoid lookups of a class in an inheritance tree. To do this, add a field to your id-class called targetClassName and make sure that it is part of the toString() and String constructor code. |
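Several of the rules for user-defined Primary Key classes can be checked mechanically before the class is ever handed to JDO. The following is a minimal sketch using only reflection from the JDK; the names PkRuleCheck, SamplePK and BrokenPK are hypothetical and are not part of JDO or DataNucleus, and the check covers only a subset of the rules (visibility, Serializable, constructors, and the toString()/String constructor round-trip).

```java
import java.io.Serializable;
import java.lang.reflect.Constructor;
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

final class PkRuleCheck
{
    /** Hypothetical PK class that follows the rules; used only to exercise the check. */
    public static class SamplePK implements Serializable
    {
        public long id;

        public SamplePK() {}

        public SamplePK(String s) { this.id = Long.parseLong(s); }

        @Override public String toString() { return Long.toString(id); }

        @Override public int hashCode() { return Long.hashCode(id); }

        @Override public boolean equals(Object o)
        {
            return o instanceof SamplePK && ((SamplePK)o).id == id;
        }
    }

    /** Hypothetical PK class that breaks two rules: a private field and no String constructor. */
    public static class BrokenPK implements Serializable
    {
        private long id;

        public BrokenPK() {}
    }

    /** Returns the violated rules; an empty list means every check here passed. */
    static List<String> violations(Object sample)
    {
        Class<?> pk = sample.getClass();
        int mods = pk.getModifiers();
        List<String> problems = new ArrayList<>();
        if (!Modifier.isPublic(mods)) problems.add("class is not public");
        if (!(sample instanceof Serializable)) problems.add("class is not Serializable");
        if (pk.isMemberClass() && !Modifier.isStatic(mods)) problems.add("inner class is not static");
        for (Field f : pk.getDeclaredFields())
        {
            int fm = f.getModifiers();
            if (!f.isSynthetic() && !Modifier.isStatic(fm) && !Modifier.isPublic(fm))
            {
                problems.add("field " + f.getName() + " is not public");
            }
        }
        try
        {
            pk.getConstructor(); // only finds public constructors
        }
        catch (NoSuchMethodException e)
        {
            problems.add("no public no-arg constructor");
        }
        try
        {
            // Rebuild the key from its own toString() output and compare with the original
            Constructor<?> fromString = pk.getConstructor(String.class);
            Object copy = fromString.newInstance(sample.toString());
            if (!copy.equals(sample) || copy.hashCode() != sample.hashCode())
            {
                problems.add("toString() and the String constructor do not round-trip");
            }
        }
        catch (NoSuchMethodException e)
        {
            problems.add("no public String constructor");
        }
        catch (ReflectiveOperationException e)
        {
            problems.add("String constructor failed: " + e);
        }
        return problems;
    }
}
```

Calling PkRuleCheck.violations(key) from a unit test, with key being a populated instance of your own PK class, is one way to catch a mismatched toString()/String constructor pair early.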

PrimaryKey Example - Multiple Field

| Again, if you are thinking of using multiple primary key fields in a class we would urge you to consider using a single (maybe surrogate) primary key field instead for reasons of simplicity and performance. This also means that you can avoid the need to define your own primary key class. |

Here’s an example of a composite (multiple field) primary key class

@PersistenceCapable(objectIdClass=ComposedIdKey.class)

public class MyClass

{

@PrimaryKey

String field1;

@PrimaryKey

String field2;

...

}

public class ComposedIdKey implements Serializable

{

public String targetClassName; // DataNucleus extension, storing the class name of the persistable object

public String field1;

public String field2;

public ComposedIdKey ()

{

}

/**

* Constructor accepting same input as generated by toString().

*/

public ComposedIdKey(String value)

{

StringTokenizer token = new StringTokenizer (value, "::");

this.targetClassName = token.nextToken(); // className

this.field1 = token.nextToken(); // field1

this.field2 = token.nextToken(); // field2

}

public boolean equals(Object obj)

{

if (obj == this)

{

return true;

}

if (!(obj instanceof ComposedIdKey))

{

return false;

}

ComposedIdKey c = (ComposedIdKey)obj;

return field1.equals(c.field1) && field2.equals(c.field2);

}

public int hashCode ()

{

return this.field1.hashCode() ^ this.field2.hashCode();

}

public String toString ()

{

// Give output expected by String constructor

return this.targetClassName + "::" + this.field1 + "::" + this.field2;

}

}

Application Identity : Generating identities

By choosing application identity you are controlling the process of identity generation for this class. This does not mean that you have a lot of work to do for this. JDO defines many ways of generating these identities and DataNucleus supports all of these and provides some more of its own besides.

See also :-

- Value Generation - strategies for generating ids

Application Identity : Accessing the Identity

When using application identity, the class has associated field(s) that equate to the identity. As a result you can simply access the values for these field(s). Alternatively you could use a JDO identity-independent way

// Using the PersistenceManager

Object id = pm.getObjectId(obj);

// Using JDOHelper

Object id = JDOHelper.getObjectId(obj);

Application Identity : Changing Identities

JDO allows implementations to support the changing of the identity of a persisted object. This is an optional feature and DataNucleus doesn’t currently support it.

Application Identity : Accessing objects by Identity

If you have the JDO identity then you can access the object with that identity like this

Object obj = pm.getObjectById(id);

If you are using SingleField identity then you can access it from the object class name and the key value like this

Object obj = pm.getObjectById(MyClass.class, mykey);

If you are using your own PK class then the mykey value is the toString() form of the identity of your PK class.

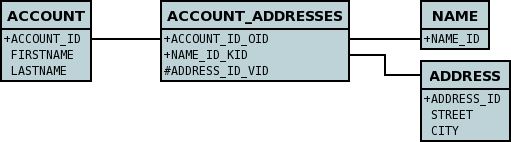

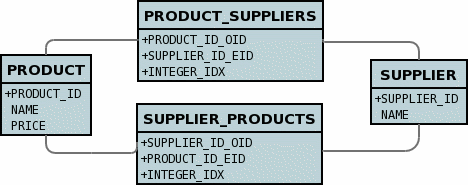

Compound Identity Relationships

A JDO "compound identity relationship" is a relationship between two classes in which the child object must coexist with the parent object and where the primary key of the child includes the persistable object of the parent. The key aspect of this type of relationship is that the primary key of one of the classes includes a persistable field (which is why it is referred to as Compound Identity). This type of relation is available in the forms covered in the sections that follow (1-1, 1-N Collection and 1-N Map).

| In the identity class of the compound persistable class you should define the objectid-class of the persistable type being contained and use that type in the identity class of the compound persistable type. |

| The persistable class that is contained cannot be using datastore identity, and must be using application identity with an objectid-class |

| When using compound identity, it is best practice to define an objectid-class for any persistable classes that are part of the primary key, and not rely on the built-in identity types. |

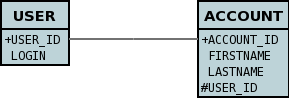

1-1 Relationship

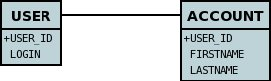

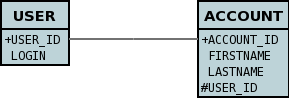

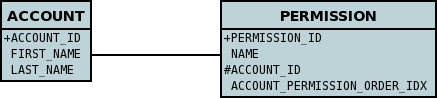

Let’s take the same classes as we have in the 1-1 Relationships. In the 1-1 relationships guide we note that in the datastore representation of the User and Account the ACCOUNT table has a primary key as well as a foreign-key to USER. In our example here we want to just have a primary key that is also a foreign-key to USER. To do this we need to modify the classes slightly, add primary-key fields and use "application-identity".

public class User

{

long id;

...

}

public class Account

{

User user;

...

}

In addition we need to define primary key classes for our User and Account classes

public class User

{

long id;

... (remainder of User class)

/**

* Inner class representing Primary Key

*/

public static class PK implements Serializable

{

public long id;

public PK()

{

}

public PK(String s)

{

this.id = Long.valueOf(s).longValue();

}

public String toString()

{

return "" + id;

}

public int hashCode()

{

return (int)id;

}

public boolean equals(Object other)

{

if (other != null && (other instanceof PK))

{

PK otherPK = (PK)other;

return otherPK.id == this.id;

}

return false;

}

}

}

public class Account

{

User user;

... (remainder of Account class)

/**

* Inner class representing Primary Key

*/

public static class PK implements Serializable

{

public User.PK user; // Use same name as the real field above

public PK()

{

}

public PK(String s)

{

StringTokenizer token = new StringTokenizer(s,"::");

this.user = new User.PK(token.nextToken());

}

public String toString()

{

return "" + this.user.toString();

}

public int hashCode()

{

return user.hashCode();

}

public boolean equals(Object other)

{

if (other != null && (other instanceof PK))

{

PK otherPK = (PK)other;

return this.user.equals(otherPK.user);

}

return false;

}

}

}

To achieve what we want with the datastore schema we define the MetaData like this

<package name="mydomain">

<class name="User" identity-type="application" objectid-class="User$PK">

<field name="id" primary-key="true"/>

<field name="login" persistence-modifier="persistent">

<column length="20" jdbc-type="VARCHAR"/>

</field>

</class>

<class name="Account" identity-type="application" objectid-class="Account$PK">

<field name="user" persistence-modifier="persistent" primary-key="true">

<column name="USER_ID"/>

</field>

<field name="firstName" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

<field name="secondName" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

</class>

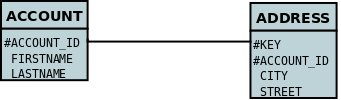

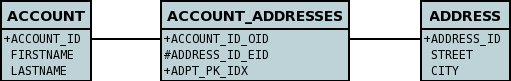

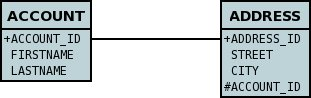

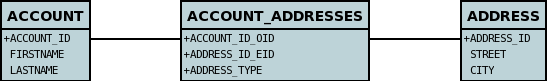

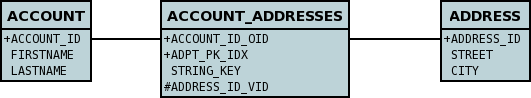

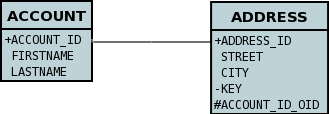

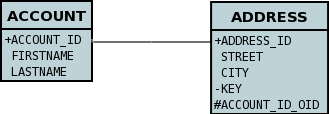

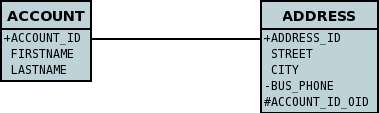

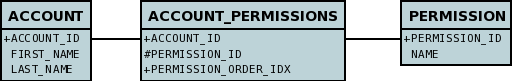

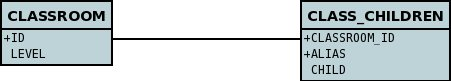

</package>So now we have the following datastore schema

Things to note :-

- You must use "application-identity" in both parent and child classes

- In the child Primary Key class, you must have a field with the same name as the relationship in the child class, and the field in the child Primary Key class must be the same type as the Primary Key class of the parent

- See also the general instructions for Primary Key classes

- You can only have one "Account" object linked to a particular "User" object since the FK to the "User" is now the primary key of "Account". To remove this restriction you could also add a "long id" to "Account" and make the "Account.PK" a composite primary-key

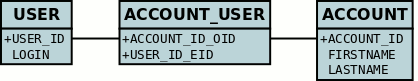

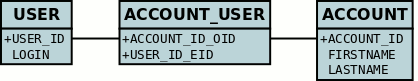

1-N Collection Relationship

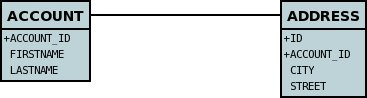

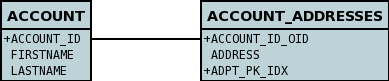

Let’s take the same classes as we have in the 1-N Relationships (FK). In the 1-N relationships guide we note that in the datastore representation of the Account and Address classes the ADDRESS table has a primary key as well as a foreign-key to ACCOUNT. In our example here we want the primary key of ADDRESS to include the foreign-key to ACCOUNT. To do this we need to modify the classes slightly, adding primary-key fields to both classes, and use "application-identity" for both.

public class Account

{

long id;

Set<Address> addresses;

...

}

public class Address

{

long id;

Account account;

...

}

In addition we need to define primary key classes for our Account and Address classes

public class Account

{

long id; // PK field

Set<Address> addresses = new HashSet<>();

... (remainder of Account class)

/**

* Inner class representing Primary Key

*/

public static class PK implements Serializable

{

public long id;

public PK()

{

}

public PK(String s)

{

this.id = Long.valueOf(s).longValue();

}

public String toString()

{

return "" + id;

}

public int hashCode()

{

return (int)id;

}

public boolean equals(Object other)

{

if (other != null && (other instanceof PK))

{

PK otherPK = (PK)other;

return otherPK.id == this.id;

}

return false;

}

}

}

public class Address

{

long id;

Account account;

... (remainder of Address class)

/**

* Inner class representing Primary Key

*/

public static class PK implements Serializable

{

public long id; // Same name as real field above

public Account.PK account; // Same name as the real field above

public PK()

{

}

public PK(String s)

{

StringTokenizer token = new StringTokenizer(s,"::");

this.id = Long.valueOf(token.nextToken()).longValue();

this.account = new Account.PK(token.nextToken());

}

public String toString()

{

return "" + id + "::" + this.account.toString();

}

public int hashCode()

{

return (int)id ^ account.hashCode();

}

public boolean equals(Object other)

{

if (other != null && (other instanceof PK))

{

PK otherPK = (PK)other;

return otherPK.id == this.id && this.account.equals(otherPK.account);

}

return false;

}

}

}

To achieve what we want with the datastore schema we define the MetaData like this

<package name="mydomain">

<class name="Account" identity-type="application" objectid-class="Account$PK">

<field name="id" primary-key="true"/>

<field name="firstName" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

<field name="secondName" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

<field name="addresses" persistence-modifier="persistent" mapped-by="account">

<collection element-type="Address"/>

</field>

</class>

<class name="Address" identity-type="application" objectid-class="Address$PK">

<field name="id" primary-key="true"/>

<field name="account" persistence-modifier="persistent" primary-key="true">

<column name="ACCOUNT_ID"/>

</field>

<field name="city" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

<field name="street" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

</class>

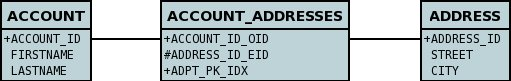

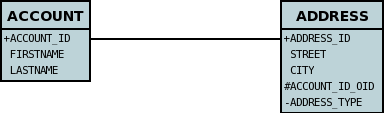

</package>So now we have the following datastore schema

Things to note :-

- You must use "application-identity" in both parent and child classes

- In the child Primary Key class, you must have a field with the same name as the relationship in the child class, and the field in the child Primary Key class must be the same type as the Primary Key class of the parent

- See also the general instructions for Primary Key classes

- If we had omitted the "id" field from "Address" it would have only been possible to have one "Address" in the "Account" "addresses" collection due to PK constraints. For that reason we have the "id" field too.

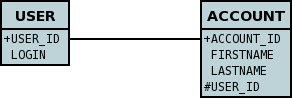

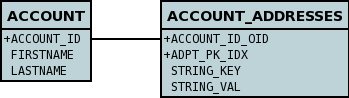

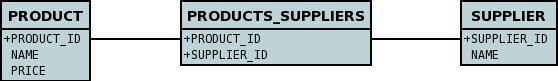

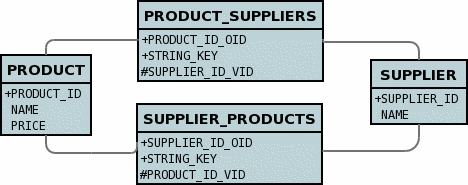

1-N Map Relationship

Let’s take the same classes as we have in the 1-N Relationships (FK). In that guide we note that in the datastore representation of the Account and Address classes the ADDRESS table has a primary key as well as a foreign-key to ACCOUNT. In our example here we want the primary key of ADDRESS to include the foreign-key to ACCOUNT. To do this we need to modify the classes slightly, adding primary-key fields to both classes, and use "application-identity" for both.

public class Account

{

long id;

Map<String, Address> addresses;

...

}

public class Address

{

long id;

String alias;

Account account;

...

}

In addition we need to define primary key classes for our Account and Address classes

public class Account

{

long id; // PK field

Map<String, Address> addresses = new HashMap<>();

... (remainder of Account class)

/**

* Inner class representing Primary Key

*/

public static class PK implements Serializable

{

public long id;

public PK()

{

}

public PK(String s)

{

this.id = Long.valueOf(s).longValue();

}

public String toString()

{

return "" + id;

}

public int hashCode()

{

return (int)id;

}

public boolean equals(Object other)

{

if (other != null && (other instanceof PK))

{

PK otherPK = (PK)other;

return otherPK.id == this.id;

}

return false;

}

}

}

public class Address

{

String alias;

Account account;

... (remainder of Address class)

/**

* Inner class representing Primary Key

*/

public static class PK implements Serializable

{

public String alias; // Same name as real field above

public Account.PK account; // Same name as the real field above

public PK()

{

}

public PK(String s)

{

StringTokenizer token = new StringTokenizer(s,"::");

this.alias = token.nextToken(); // alias is a String, so no numeric conversion

this.account = new Account.PK(token.nextToken());

}

public String toString()

{

return alias + "::" + this.account.toString();

}

public int hashCode()

{

return alias.hashCode() ^ account.hashCode();

}

public boolean equals(Object other)

{

if (other != null && (other instanceof PK))

{

PK otherPK = (PK)other;

return otherPK.alias.equals(this.alias) && this.account.equals(otherPK.account);

}

return false;

}

}

}

To achieve what we want with the datastore schema we define the MetaData like this

<package name="com.mydomain">

<class name="Account" objectid-class="Account$PK">

<field name="id" primary-key="true"/>

<field name="firstname" persistence-modifier="persistent">

<column length="100" jdbc-type="VARCHAR"/>

</field>

<field name="lastname" persistence-modifier="persistent">

<column length="100" jdbc-type="VARCHAR"/>

</field>

<field name="addresses" persistence-modifier="persistent" mapped-by="account">

<map key-type="java.lang.String" value-type="com.mydomain.Address"/>

<key mapped-by="alias"/>

</field>

</class>

<class name="Address" objectid-class="Address$PK">

<field name="account" persistence-modifier="persistent" primary-key="true"/>

<field name="alias" null-value="exception" primary-key="true">

<column name="KEY" length="20" jdbc-type="VARCHAR"/>

</field>

<field name="city" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

<field name="street" persistence-modifier="persistent">

<column length="50" jdbc-type="VARCHAR"/>

</field>

</class>

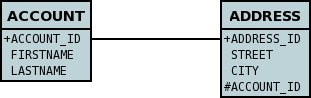

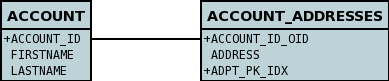

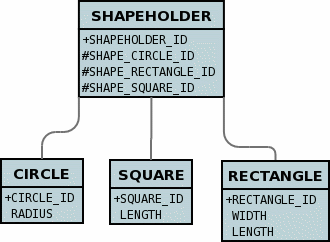

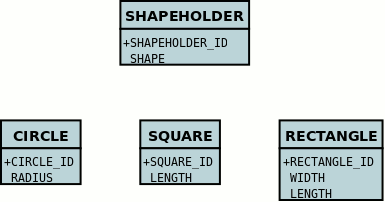

</package>So now we have the following datastore schema

Things to note :-

- You must use "application-identity" in both parent and child classes

- In the child Primary Key class, you must have a field with the same name as the relationship in the child class, and the field in the child Primary Key class must be the same type as the Primary Key class of the parent

- See also the general instructions for Primary Key classes

- If we had omitted the "alias" field from "Address" it would have only been possible to have one "Address" in the "Account" "addresses" map due to PK constraints. For that reason we have the "alias" field too as part of the PK.

Versioning

JDO allows objects of classes to be versioned. The version is typically used as a way of detecting if the object has been updated by another thread or PersistenceManager since retrieval using the current PersistenceManager - for use by Optimistic Locking. JDO defines several "strategies" for generating the version of an object. The strategy has the following possible values

- none stores a number like the version-number but will not perform any optimistic checks.

- version-number stores a number (starting at 1) representing the version of the object.

- date-time stores a temporal representing the time at which the object was last updated. Note that not all RDBMS store milliseconds in a Timestamp!

- state-image stores a Long value being the hash code of all fields of the object. DataNucleus doesn’t currently support this option.
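To make the role of the version in optimistic locking concrete, here is a minimal in-memory sketch of the version-number strategy. It does not use JDO or DataNucleus at all; the class OptimisticVersionSketch and its methods are hypothetical, and the choice of IllegalStateException for a stale update is an assumption made for illustration only.

```java
import java.util.HashMap;
import java.util.Map;

final class OptimisticVersionSketch
{
    /** A stored record: its value plus a version number that starts at 1. */
    static final class Row
    {
        final String value;
        final long version;

        Row(String value, long version)
        {
            this.value = value;
            this.version = version;
        }
    }

    private final Map<Long, Row> table = new HashMap<>();

    void insert(long id, String value)
    {
        table.put(id, new Row(value, 1L));
    }

    Row read(long id)
    {
        return table.get(id);
    }

    /**
     * Applies the update only if the caller still holds the current version,
     * then increments the version. Returns the new version number.
     */
    long update(long id, String newValue, long expectedVersion)
    {
        Row current = table.get(id);
        if (current == null || current.version != expectedVersion)
        {
            // Someone else updated (or deleted) the record since it was read
            throw new IllegalStateException("Stale version " + expectedVersion + " for id " + id);
        }
        Row next = new Row(newValue, current.version + 1);
        table.put(id, next);
        return next.version;
    }
}
```

Two callers that both read version 1 can both attempt an update, but only the first succeeds; the second is rejected because the stored version has moved on to 2.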

Versioning using a surrogate column

The default JDO mechanism for versioning of objects in RDBMS datastores is via a surrogate column in the table of the class. In the MetaData you specify the details of the surrogate column and the strategy to be used. For example

<package name="mydomain">

<class name="User" table="USER">

<version strategy="version-number" column="VERSION"/>

<field name="name" column="NAME"/>

...

</class>

</package>

alternatively using annotations

@PersistenceCapable

@Version(strategy=VersionStrategy.VERSION_NUMBER, column="VERSION")

public class MyClass

{

...

}

The specification above will create a table with an additional column called VERSION that will store the version of the object.

Versioning using a field/property of the class

DataNucleus provides an extension to JDO whereby you can have a field of your class store the version of the object. This equates to JPA’s default versioning process whereby you have to have a field present. To do this, let’s take a class

public class User

{

String name;

...

long myVersion;

}

and we want to store the version of the object in the field "myVersion". So we specify the metadata as follows

<package name="mydomain">

<class name="User" table="USER">

<version strategy="version-number">

<extension vendor-name="datanucleus" key="field-name" value="myVersion"/>

</version>

<field name="name" column="NAME"/>

...

<field name="myVersion" column="VERSION"/>

</class>

</package>

alternatively using annotations

@PersistenceCapable

@Version(strategy=VersionStrategy.VERSION_NUMBER, column="VERSION",

extensions={@Extension(vendorName="datanucleus", key="field-name", value="myVersion")})

public class MyClass

{

protected long myVersion;

...

}

and so now objects of our class will have access to the version via the "myVersion" field.

| The field must be of one of the following types : int, long, short, java.lang.Integer, java.lang.Long, java.lang.Short, java.sql.Timestamp, java.sql.Date, java.sql.Time, java.util.Date, java.util.Calendar, java.time.Instant. |

Auditing

| Applicable to RDBMS |

With standard JDO you have no annotations available to automatically add timestamps and user names into the datastore against each record when it is persisted or updated. Whilst you can do this manually, setting the field(s) in prePersist callbacks etc, DataNucleus provides some simple annotations to make it simpler still.

import org.datanucleus.api.jdo.annotations.CreateTimestamp;

import org.datanucleus.api.jdo.annotations.CreateUser;

import org.datanucleus.api.jdo.annotations.UpdateTimestamp;

import org.datanucleus.api.jdo.annotations.UpdateUser;

@PersistenceCapable

public class Hotel

{

@CreateTimestamp

Timestamp createTimestamp;

@CreateUser

String createUser;

@UpdateTimestamp

Timestamp updateTimestamp;

@UpdateUser

String updateUser;

...

}

In the above example we have 4 fields in the class that will have columns in the datastore. The fields createTimestamp and createUser will be persisted at INSERT with the Timestamp and current user for the insert. The fields updateTimestamp and updateUser will be persisted whenever any update is made to the object in the datastore, with the Timestamp and current user for the update.

If you instead wanted to define this in XML then do it like this

<class name="Hotel">

<field name="createTimestamp">

<extension vendor-name="datanucleus" key="create-timestamp" value="true"/>

</field>

<field name="createUser">

<extension vendor-name="datanucleus" key="create-user" value="true"/>

</field>

<field name="updateTimestamp">

<extension vendor-name="datanucleus" key="update-timestamp" value="true"/>

</field>

<field name="updateUser">

<extension vendor-name="datanucleus" key="update-user" value="true"/>

</field>

</class>

| Any field marked as @CreateTimestamp / @UpdateTimestamp needs to be of type java.sql.Timestamp or java.time.Instant. |

Defining the Current User

The timestamp can be automatically generated for population here, but clearly the current user is not available as a standard, and so we have to provide a mechanism for setting it. You have 2 ways to do this; choose the one that is most appropriate to your situation

- Specify the persistence property datanucleus.CurrentUser on the PMF to be the current user to use. Optionally you can also specify the same persistence property on each PM if you have a particular user for each PM.

- Define an implementation of the DataNucleus interface org.datanucleus.store.schema.CurrentUserProvider, and specify it on PMF creation using the property datanucleus.CurrentUserProvider. This is defined as follows

public interface CurrentUserProvider

{

/** Return the current user. */

String currentUser();

}

So you could, for example, store the current user in a thread-local and return it via your implementation of this interface.
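The thread-local approach might look something like the following sketch. To keep it self-contained, it declares a local stand-in interface with the same single method as the CurrentUserProvider shown above; a real implementation would instead implement org.datanucleus.store.schema.CurrentUserProvider and be named in the datanucleus.CurrentUserProvider property. The class names and the set/clear helper methods are hypothetical.

```java
final class ThreadLocalUserSketch
{
    /** Local stand-in with the same shape as the DataNucleus CurrentUserProvider interface. */
    interface CurrentUserProvider
    {
        String currentUser();
    }

    /** Hypothetical provider that returns whichever user was registered for the calling thread. */
    static final class ThreadLocalCurrentUserProvider implements CurrentUserProvider
    {
        private static final ThreadLocal<String> USER = new ThreadLocal<>();

        /** Call when a request or unit of work starts, e.g. after authentication. */
        static void set(String user)
        {
            USER.set(user);
        }

        /** Call when the unit of work ends, so pooled threads do not leak the previous user. */
        static void clear()
        {
            USER.remove();
        }

        @Override
        public String currentUser()
        {
            return USER.get(); // null when no user has been set on this thread
        }
    }
}
```

The application would call set(...) before persisting on a given thread and clear() afterwards; clearing matters most when threads are reused from a pool.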

Full Traceability Auditing

DataNucleus doesn’t currently provide a full traceability auditing capability, whereby you can track all changes to every relevant field. This would involve having a mirror table for each persistable class and, for each insert/update of an object, would require 2 SQL statements to be issued. The obvious consequence would be to slow down the persistence process.

Should your organisation require this, we could work with you to provide it. Please contact us if interested.

Fields/Properties

Once we have defined a class to be persistable, we need to define how to persist the different fields/properties that are to be persisted. There are two distinct modes of persistence definition; the most common uses fields, whereas an alternative uses properties.

Persistent Fields

The most common form of persistence is where you have a field in a class and want to persist it to the datastore. With this mode of operation DataNucleus will persist the values stored in the fields into the datastore, and will set the values of the fields when extracting it from the datastore.

| Requirement : you have a field in the class. This can be public, protected, private or package access, but cannot be static or final. |

Almost all Java field types are default persistent (if DataNucleus knows how to persist a type then it defaults to persistent), so there is no real need to specify @Persistent to make the field persistent.

An example of how to define the persistence of a field is shown below

@PersistenceCapable

public class MyClass

{

@Persistent

Date birthday;

@NotPersistent

String someOtherField;

}

So, using annotations, we have marked the field birthday as persistent, whereas field someOtherField is declared as not persisted. Please note that in this particular case, Date is by default persistent so we could omit the @Persistent annotation (with non-default-persistent types we would definitely need the annotation). Using XML MetaData we would have done

<class name="MyClass">

<field name="birthday" persistence-modifier="persistent"/>

<field name="someOtherField" persistence-modifier="none"/>

</class>

Please note that the field Java type defines whether it is, by default, persistable.

Persistent Properties

A second mode of operation is where you have Java Bean-style getter/setter for a property. In this situation you want to persist the output from getXXX to the datastore, and use the setXXX to load up the value into the object when extracting it from the datastore.

| Requirement : you have a property in the class with Java Bean getter/setter methods. These methods can be public, protected, private or package access, but cannot be static. The class must have BOTH getter AND setter methods. |

| The JavaBean specification is to have a getter method with signature {type} getMyField() and a setter method with signature void setMyField({type} arg), where the property name is then myField, and the type is {type}. |
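As an illustration of the naming convention, the sketch below uses plain reflection to list the properties of a class that have both a getXXX and a matching setXXX method. It is not how DataNucleus discovers properties; BeanPropertySketch and Person are hypothetical names, and the name derivation is simplified to lower-casing the first letter after the get prefix.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.Date;
import java.util.Set;
import java.util.TreeSet;

final class BeanPropertySketch
{
    /** Hypothetical class with one complete property and one getter-only property. */
    static class Person
    {
        private Date birthday;

        Date getBirthday() { return birthday; }

        void setBirthday(Date date) { this.birthday = date; }

        String getNickname() { return "n/a"; } // no setter, so not a usable property
    }

    /** Returns the names of properties declared in cls that have BOTH a getter and a setter. */
    static Set<String> propertiesWithGetterAndSetter(Class<?> cls)
    {
        Set<String> names = new TreeSet<>();
        for (Method getter : cls.getDeclaredMethods())
        {
            String name = getter.getName();
            if (!name.startsWith("get") || name.length() <= 3
                || getter.getParameterCount() != 0
                || getter.getReturnType() == void.class
                || Modifier.isStatic(getter.getModifiers()))
            {
                continue;
            }
            String suffix = name.substring(3);
            try
            {
                // The setter must take exactly the getter's return type and return void
                Method setter = cls.getDeclaredMethod("set" + suffix, getter.getReturnType());
                if (setter.getReturnType() == void.class && !Modifier.isStatic(setter.getModifiers()))
                {
                    names.add(Character.toLowerCase(suffix.charAt(0)) + suffix.substring(1));
                }
            }
            catch (NoSuchMethodException e)
            {
                // Getter without a matching setter: skip it
            }
        }
        return names;
    }
}
```

For the Person class here, only birthday qualifies, since getNickname() has no matching setter.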

An example of how to define the persistence of a property is shown below

@PersistenceCapable

public class MyClass

{

@Persistent

Date getBirthday()

{

...

}

void setBirthday(Date date)

{

...

}

}

So, using annotations, we have marked this class as persistent, and the getter is marked as persistent. By default a property is non-persistent, so there is no need to mark any other property (such as someOtherField in the field-based example) as not persistent. Using XML MetaData we would have done

<class name="MyClass">

<property name="birthday" persistence-modifier="persistent"/>

</class>

Overriding Superclass Field/Property MetaData

If you are using XML MetaData you can also override the MetaData for fields/properties of superclasses. You do this by adding an entry for {class-name}.fieldName, like this

<class name="Hotel" detachable="true">

...

<field name="HotelSuperclass.someField" default-fetch-group="false"/>

</class>

so we have changed the field "someField" specified in the persistent superclass "HotelSuperclass" to not be part of the DFG.

Making a field/property non-persistent

If you have a field/property that you don’t want to persist, just mark its persistence-modifier as none, like this

@NotPersistent

String unimportantField;

or with XML

<class name="mydomain.MyClass">

<field name="unimportantField" persistence-modifier="none"/>

</class>

Making a field/property read-only

If you want to make a member read-only you can do it like this.

<jdo>

<package name="mydomain">

<class name="MyClass">

<field name="myField">

<extension vendor-name="datanucleus" key="insertable" value="false"/>

<extension vendor-name="datanucleus" key="updateable" value="false"/>

</field>

</class>

</package>

</jdo>

and with annotations

@PersistenceCapable

public class MyClass

{

@Extension(vendorName="datanucleus", key="insertable", value="false")

@Extension(vendorName="datanucleus", key="updateable", value="false")

String myField;

}

alternatively using a DataNucleus convenience annotation

import org.datanucleus.api.jdo.annotations.ReadOnly;

@PersistenceCapable

public class MyClass

{

@ReadOnly

String myField;

}

Field Types

When persisting a class, a persistence solution needs to know how to persist the types of each field in the class. Clearly a persistence solution can only support a finite number of Java types; it cannot know how to persist every possible type creatable. The JDO specification defines lists of types that are required to be supported by all implementations of the specification. This support can be conveniently split into two parts

- First-Class (FCO) Types : An object that can be referred to (object reference, providing a relation) and that has an "identity" is termed a First Class Object (FCO). DataNucleus supports the following Java types as FCO :

  - persistable : any class marked for persistence can be persisted with its own identity in the datastore

  - interface where the field represents a persistable object

  - java.lang.Object where the field represents a persistable object

- Second-Class (SCO) Types : An object that does not have an "identity" is termed a Second Class Object (SCO). This is something like a String or Date field in a class, or alternatively a Collection (that contains other objects). The sections below show the currently supported SCO Java types in DataNucleus. The tables in these sections show

  - default-fetch-group (DFG) : whether the field is retrieved by default when retrieving the object itself

  - proxy : whether the field is represented by a "proxy" that intercepts any operations to detect whether it has changed internally.

  - primary-key : whether the field can be used as part of the primary-key

| First-Class Types (relation fields) are not present in the default fetch group. |

| With DataNucleus, all types that we have a way of persisting (i.e. those listed below) are persistent by default (meaning that you don’t need to annotate them in any way to persist them). The only field types where this is not always true are java.lang.Object, some Serializable types, arrays of persistables, and java.io.File, so it is always safer to mark those as persistent. |

Where a second-class type can be persisted in several ways, you select the column type(s) by specifying the jdbc-type for the field. Alternatively, find the name of the internal DataNucleus TypeConverter and reference it via the metadata extension "type-converter-name".

If you have support for any additional types and would either like to contribute them, or have them listed here, let us know. Supporting a new type is easy, typically involving a JDO AttributeConverter if you can easily convert the type into a String or Long.

| You can add support for a Java type using the Java Types extension point. |

| You can also define more specific support for it with RDBMS datastores using the RDBMS Java Types extension point. |

With DataNucleus, second-class types are handled using wrappers and bytecode enhancement. This contrasts with Hibernate, which uses proxies and imposes their restrictions on you.

| When your field type is a type that is mutable it will be replaced by a "wrapper" when the owning object is managed. By default this wrapper type will be based on the instantiated type. You can change this to use the declared type by setting the persistence property datanucleus.type.wrapper.basis to declared. |
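To make the difference between the declared type and the instantiated type concrete, here is a minimal plain-Java sketch that reports both for a field. It does not use DataNucleus at all, and the names `WrapperBasisDemo`, `Basket` and `items` are hypothetical; which wrapper class DataNucleus actually selects for each basis is not shown here.

```java
import java.lang.reflect.Field;
import java.util.ArrayList;
import java.util.Collection;

// Hypothetical demo class: reports the two types a wrapper could be based on.
class WrapperBasisDemo {

    // Hypothetical owner class with a mutable second-class field.
    static class Basket {
        // Declared type is Collection, instantiated type is ArrayList.
        Collection<String> items = new ArrayList<>();
    }

    // The type written in the field declaration.
    static Class<?> declaredType(Object owner, String fieldName) {
        try {
            return owner.getClass().getDeclaredField(fieldName).getType();
        } catch (NoSuchFieldException e) {
            throw new IllegalArgumentException("No such field: " + fieldName, e);
        }
    }

    // The runtime type of the value currently assigned to the field.
    static Class<?> instantiatedType(Object owner, String fieldName) {
        try {
            Field field = owner.getClass().getDeclaredField(fieldName);
            field.setAccessible(true);
            return field.get(owner).getClass();
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("Cannot read field: " + fieldName, e);
        }
    }

    public static void main(String[] args) {
        Basket basket = new Basket();
        System.out.println("declared:     " + declaredType(basket, "items").getName());
        System.out.println("instantiated: " + instantiatedType(basket, "items").getName());
    }
}
```

For this field the default basis (instantiated type) would correspond to `java.util.ArrayList`, whereas setting datanucleus.type.wrapper.basis to declared would correspond to `java.util.Collection`.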

Primitive and java.lang Types

All primitive types and wrappers are supported and will be persisted into a single database "column". Arrays of these are also supported, and can either be serialised into a single column, or persisted into a join table (dependent on datastore).

| Java Type | DFG? | Proxy? | PK? | Comments |
|---|---|---|---|---|
| boolean | | | | Persisted as BOOLEAN, Integer (i.e. 1, 0), String (i.e. 'Y', 'N'). |
| byte | | | | |
| char | | | | |
| double | | | | |
| float | | | | |
| int | | | | |
| long | | | | |
| short | | | | |
| java.lang.Boolean | | | | Persisted as BOOLEAN, Integer (i.e. 1, 0), String (i.e. 'Y', 'N'). |
| java.lang.Byte | | | | |
| java.lang.Character | | | | |
| java.lang.Double | | | | |
| java.lang.Float | | | | |
| java.lang.Integer | | | | |
| java.lang.Long | | | | |
| java.lang.Short | | | | |
| java.lang.Number | | | | Persisted in a column capable of storing a BigDecimal, and will store to the precision of the object to be persisted. On reading back, the object will typically be returned as a BigDecimal since there is no mechanism for determining the type of the object that was stored. |
| java.lang.String | | | | |
| java.lang.StringBuffer | | | | Persisted as String. The dirty check mechanism for this type is limited to immutable mode, which means that if you change a StringBuffer object field, you must reassign it to the owner object field to make sure changes are propagated to the database. |
| java.lang.StringBuilder | | | | Persisted as String. The dirty check mechanism for this type is limited to immutable mode, which means that if you change a StringBuilder object field, you must reassign it to the owner object field to make sure changes are propagated to the database. |
| java.lang.Class | | | | Persisted as String. |
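The behaviour described for java.lang.Number can be pictured with a small plain-Java simulation. This is only a sketch of the idea, not DataNucleus code: the class `NumberRoundTripDemo` and its `store`/`load` methods are hypothetical stand-ins for writing to and reading from a BigDecimal-capable column.

```java
import java.math.BigDecimal;

// Hypothetical demo: simulates storing a Number in a BigDecimal-capable column.
class NumberRoundTripDemo {

    // "Store": only the numeric value is kept, not the original subtype.
    // Assumes a finite value (NaN and infinities have no BigDecimal form).
    static BigDecimal store(Number value) {
        return new BigDecimal(value.toString());
    }

    // "Load": the original subtype is unknown, so a BigDecimal comes back.
    static Number load(BigDecimal columnValue) {
        return columnValue;
    }

    public static void main(String[] args) {
        Number original = Integer.valueOf(42);
        Number reloaded = load(store(original));
        System.out.println(reloaded.getClass().getSimpleName());        // BigDecimal
        System.out.println(reloaded.intValue() == original.intValue()); // true
    }
}
```

The value survives the round trip, but the runtime type does not. If the concrete type matters to your code, it is probably simpler to declare the field with that type (for example Integer) rather than Number.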

java.math types

BigInteger and BigDecimal are supported and persisted into a single numeric column by default.

| Java Type | DFG? | Proxy? | PK? | Comments |
|---|---|---|---|---|
| java.math.BigDecimal | | | | Persisted as DOUBLE or String. String can be used to retain precision. |
| java.math.BigInteger | | | | Persisted as INTEGER or String. String can be used to retain precision. |
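The remark that String can be used to retain precision is easy to see in plain Java. The sketch below is illustrative only: `PrecisionDemo`, `viaDouble` and `viaString` are hypothetical names that mimic a value passing through a double-typed or a string-typed column, and no datastore is involved.

```java
import java.math.BigDecimal;

// Hypothetical demo: compares a round trip through double with one through String.
class PrecisionDemo {

    // Mimics a DOUBLE column: the value is narrowed to a 64-bit double.
    static BigDecimal viaDouble(BigDecimal value) {
        return BigDecimal.valueOf(value.doubleValue());
    }

    // Mimics a String column: the full decimal expansion is kept.
    static BigDecimal viaString(BigDecimal value) {
        return new BigDecimal(value.toString());
    }

    public static void main(String[] args) {
        BigDecimal value = new BigDecimal("1234567890.12345678901234567890");
        System.out.println(value.compareTo(viaDouble(value)) == 0); // false
        System.out.println(value.compareTo(viaString(value)) == 0); // true
    }
}
```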

Temporal Types (java.util, java.sql, java.time, Jodatime)

DataNucleus supports a very wide range of temporal types, with flexibility in how they are persisted.

| Java Type | DFG? | Proxy? | PK? | Comments |
|---|---|---|---|---|
| java.sql.Date | | | | Persisted as DATE, String, DATETIME or Long. |
| java.sql.Time | | | | Persisted as TIME, String, DATETIME or Long. |
| java.sql.Timestamp | | | | Persisted as TIMESTAMP, String or Long. |
| java.util.Calendar | | | | Persisted as TIMESTAMP (inc Timezone), DATETIME, String, or as (Long, String) storing millis + timezone respectively. |
| java.util.GregorianCalendar | | | | Persisted as TIMESTAMP (inc Timezone), DATETIME, String, or as (Long, String) storing millis + timezone respectively. |
| java.util.Date | | | | Persisted as DATETIME, String or Long. |
| java.util.TimeZone | | | | Persisted as String. |
| java.time.LocalDateTime | | | | Persisted as TIMESTAMP, String, or DATETIME. |
| java.time.LocalTime | | | | Persisted as TIME, String, or Long. |
| java.time.LocalDate | | | | Persisted as DATE, String, or DATETIME. |
| java.time.OffsetDateTime | | | | Persisted as TIMESTAMP, String, or DATETIME. |
| java.time.OffsetTime | | | | Persisted as TIME, String, or Long. |
| java.time.MonthDay | | | | Persisted as String, DATE, or as (Integer, Integer) with the latter being month + day respectively. |
| java.time.YearMonth | | | | Persisted as String, DATE, or as (Integer, Integer) with the latter being year + month respectively. |
| java.time.Year | | | | Persisted as Integer, or String. |
| java.time.Period | | | | Persisted as String. |
| java.time.Instant | | | | Persisted as TIMESTAMP, String, Long, or DATETIME. |
| java.time.Duration | | | | Persisted as String, Double (secs.nanos), or Long (secs). |
| java.time.ZoneId | | | | Persisted as String. |
| java.time.ZoneOffset | | | | Persisted as String. |
| java.time.ZonedDateTime | | | | Persisted as TIMESTAMP, or String. |
| org.joda.time.DateTime | | | | Requires datanucleus-jodatime plugin. Persisted as TIMESTAMP or String. |
| org.joda.time.LocalTime | | | | Requires datanucleus-jodatime plugin. Persisted as TIME or String. |
| org.joda.time.LocalDate | | | | Requires datanucleus-jodatime plugin. Persisted as DATE or String. |
| org.joda.time.LocalDateTime | | | | Requires datanucleus-jodatime plugin. Persisted as TIMESTAMP, or String. |
| org.joda.time.Duration | | | | Requires datanucleus-jodatime plugin. Persisted as String or Long. |
| org.joda.time.Interval | | | | Requires datanucleus-jodatime plugin. Persisted as String or (TIMESTAMP, TIMESTAMP). |
| org.joda.time.Period | | | | Requires datanucleus-jodatime plugin. Persisted as String. |
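As an illustration of the two-column (Long, String) option listed for java.util.Calendar, the following plain-Java sketch splits a Calendar into millis and a timezone id and rebuilds it from those two values. It only mirrors the idea; `CalendarColumnsDemo` and its methods are hypothetical, and the exact column values DataNucleus writes are not shown here.

```java
import java.util.Calendar;
import java.util.GregorianCalendar;
import java.util.TimeZone;

// Hypothetical demo: a Calendar decomposed into (Long, String) and rebuilt.
class CalendarColumnsDemo {

    // Value for the numeric column: milliseconds since the epoch.
    static long toMillis(Calendar cal) {
        return cal.getTimeInMillis();
    }

    // Value for the String column: the timezone identifier.
    static String toZoneId(Calendar cal) {
        return cal.getTimeZone().getID();
    }

    // Rebuilds a Calendar from the two stored values.
    static Calendar fromColumns(long millis, String zoneId) {
        Calendar cal = new GregorianCalendar(TimeZone.getTimeZone(zoneId));
        cal.setTimeInMillis(millis);
        return cal;
    }

    public static void main(String[] args) {
        Calendar original = fromColumns(1_000_000L, "UTC");
        Calendar rebuilt = fromColumns(toMillis(original), toZoneId(original));
        System.out.println(rebuilt.getTimeInMillis() + " " + rebuilt.getTimeZone().getID());
    }
}
```

Storing the timezone alongside the millis is what lets the reloaded Calendar report the same local fields (hour, day and so on) as the original, which a single Long column cannot do.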

Collection/Map types

DataNucleus supports a very wide range of collection, list and map types.

| Java Type | DFG? | Proxy? | PK? | Comments |
|---|---|---|---|---|
| java.util.Collection | | | | |
| java.util.List | | | | |
| java.util.Map | | | | |
| java.util.Queue | | | | The comparator is specifiable via the metadata extension comparator-name (see below). See the 1-N Mapping Guide. |
| java.util.Set | | | | |
| java.util.SortedMap | | | | The comparator is specifiable via the metadata extension comparator-name (see below). See the 1-N Mapping Guide. |
| java.util.SortedSet | | | | The comparator is specifiable via the metadata extension comparator-name (see below). See the 1-N Mapping Guide. |
| java.util.ArrayList | | | | |
| java.util.BitSet | | | | Persisted as collection by default, but will be stored as String when the datastore doesn’t provide for collection storage. |
| java.util.HashMap | | | | |
| java.util.HashSet | | | | |
| java.util.Hashtable | | | | |
| java.util.LinkedHashMap | | | | Persisted as a Map currently. No List-ordering is supported. See the 1-N Mapping Guide. |
| java.util.LinkedHashSet | | | | Persisted as a Set currently. No List-ordering is supported. See the 1-N Mapping Guide. |
| java.util.LinkedList | | | | |
| java.util.Properties | | | | |
| java.util.PriorityQueue | | | | The comparator is specifiable via the metadata extension comparator-name (see below). See the 1-N Mapping Guide. |
| java.util.Stack | | | | |
| java.util.TreeMap | | | | The comparator is specifiable via the metadata extension comparator-name (see below). See the 1-N Mapping Guide. |
| java.util.TreeSet | | | | The comparator is specifiable via the metadata extension comparator-name (see below). See the 1-N Mapping Guide. |
| java.util.Vector | | | | |
| com.google.common.collect.Multiset | | | | Requires datanucleus-guava plugin. See the 1-N Mapping Guide. |

Collection Comparators

Collections that support a Comparator to order the elements of the set can specify it in metadata like this.

@Element

@Extension(vendorName="datanucleus", key="comparator-name", value="mydomain.model.MyComparator")

SortedSet<MyElementType> elements;

When instantiating the SortedSet field it will create it with a comparator of the specified class (which must have a default constructor).
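The default-constructor requirement follows from the comparator being created from its class name alone. The sketch below shows one plausible way to do that in plain Java. It is not the DataNucleus implementation; `ComparatorNameDemo`, `ReverseLengthComparator` and `newSortedSet` are hypothetical names.

```java
import java.util.Comparator;
import java.util.SortedSet;
import java.util.TreeSet;

// Hypothetical demo: builds a SortedSet from a comparator class name.
class ComparatorNameDemo {

    // Hypothetical comparator: longest strings first, ties broken alphabetically.
    // The public no-arg constructor is what allows creation by class name.
    public static class ReverseLengthComparator implements Comparator<String> {
        public ReverseLengthComparator() {
        }

        @Override
        public int compare(String a, String b) {
            int byLength = Integer.compare(b.length(), a.length());
            return byLength != 0 ? byLength : a.compareTo(b);
        }
    }

    // Instantiates the named comparator reflectively and wraps it in a TreeSet.
    @SuppressWarnings("unchecked")
    static <E> SortedSet<E> newSortedSet(String comparatorClassName) {
        try {
            Comparator<E> comparator = (Comparator<E>) Class.forName(comparatorClassName)
                    .getDeclaredConstructor()
                    .newInstance();
            return new TreeSet<>(comparator);
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("Cannot instantiate " + comparatorClassName, e);
        }
    }

    public static void main(String[] args) {
        SortedSet<String> names = newSortedSet(ReverseLengthComparator.class.getName());
        names.add("bb");
        names.add("a");
        names.add("cccc");
        System.out.println(names); // [cccc, bb, a]
    }
}
```

A comparator class without a no-arg constructor would fail at the `getDeclaredConstructor()` call in this sketch, which is the kind of failure the requirement is there to prevent.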

Enums

DataNucleus supports persisting Enums, and they can be stored as either the ordinal (numeric column) or name (String column).

| Java Type | DFG? | Proxy? | PK? | Comments |
|---|---|---|---|---|
| java.lang.Enum | | | | Persisted as String (name) or int (ordinal). Specified via jdbc-type. |
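The two storage forms correspond directly to the standard `name()` and `ordinal()` methods of an enum. The following plain-Java sketch shows what each form would hold and how a value is recovered from it. `EnumStorageDemo` and `Status` are hypothetical names, and no datastore is involved.

```java
// Hypothetical demo: the two representations an enum column can hold.
class EnumStorageDemo {

    enum Status { NEW, ACTIVE, CLOSED }

    // Value for a String column: the constant's name.
    static String asNameColumn(Status status) {
        return status.name();
    }

    // Value for a numeric column: the constant's position in the declaration.
    static int asOrdinalColumn(Status status) {
        return status.ordinal();
    }

    // Recovers the constant from a stored name.
    static Status fromNameColumn(String stored) {
        return Status.valueOf(stored);
    }

    // Recovers the constant from a stored ordinal.
    static Status fromOrdinalColumn(int stored) {
        return Status.values()[stored];
    }

    public static void main(String[] args) {
        System.out.println(asNameColumn(Status.ACTIVE));    // ACTIVE
        System.out.println(asOrdinalColumn(Status.ACTIVE)); // 1
    }
}
```

Ordinal storage is compact but depends on declaration order: inserting or reordering constants changes what existing stored numbers mean, whereas stored names only break if a constant is renamed.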

Enum custom values

A DataNucleus extension to this is where you have an Enum that defines its own "value"s for the different enum options.

| Currently applicable to RDBMS, MongoDB, Cassandra, Neo4j, HBase, Excel, ODF and JSON. |

public enum MyColour

{

RED((short)1), GREEN((short)3), BLUE((short)5), YELLOW((short)8);

private short value;

private MyColour(short value)

{

this.value = value;

}

public short getValue()

{

return value;

}

}

With the default persistence the enum is stored by name, so it would persist "RED", "GREEN", "BLUE" etc. With jdbc-type as INTEGER it would persist 0, 1, 2, 3, these being the ordinal values. If you define the metadata as

@Extension(vendorName="datanucleus", key="enum-value-getter", value="getValue")